TL;DR SEO test

New pages can appear almost instantly in Google SERPs for keyword searches. Google Search Console appears to take longer to report these pages as indexed, and operators such as site:, info: and cache: do not work immediately.

Hypothesis: Elements such as Google Search Console listing a page as ‘indexed’ may come after the ‘second stage’ of indexing, when a page has been rendered by Google.

Test: Publish a new page that uses JavaScript to change the page title. Observe whether the page is reported as indexed in Google Search Console, or works with the cache:, site: and info: operators, before the JavaScript title appears in the SERPs.

If the title of this page includes “PHASE ONE” in the SERPs, the JavaScript has not been executed and, if the hypothesis holds, the page should not appear as indexed in GSC.

If the title of this page includes “PHASE TWO” in the SERPs, the JavaScript has been executed.
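As a rough illustration of the kind of client-side title swap this test relies on, here is a minimal sketch. The function name `applyPhaseTwoTitle` is hypothetical, and the sketch assumes the server-rendered title already contains the text “PHASE ONE”; it is not necessarily the exact script used on this page.

```javascript
// Hypothetical helper: replaces the server-rendered "PHASE ONE" marker
// with "PHASE TWO" on any object that has a `title` property.
function applyPhaseTwoTitle(doc) {
  doc.title = doc.title.replace("PHASE ONE", "PHASE TWO");
  return doc.title;
}

// In a browser, run against the real document. Outside a browser
// (for example in Node) there is no `document`, so nothing happens.
if (typeof document !== "undefined") {
  applyPhaseTwoTitle(document);
}
```

A crawler that only reads the raw HTML would see the “PHASE ONE” title, while one that executes the script would see “PHASE TWO”.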

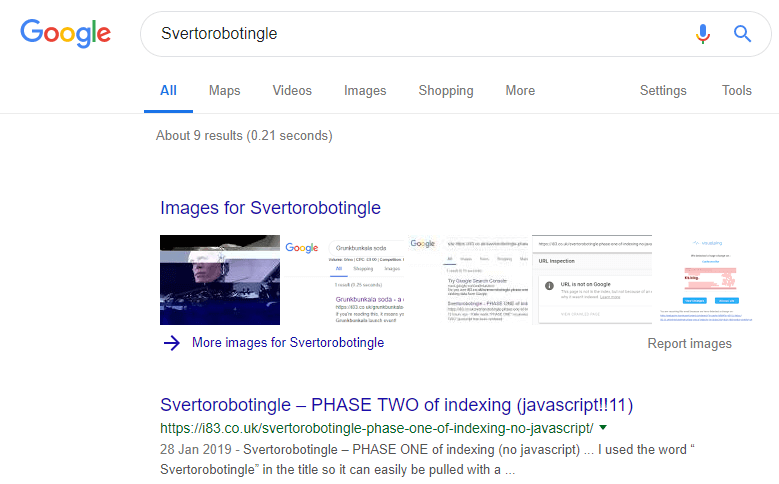

I used the word “Svertorobotingle” in the title so it can easily be pulled with a Google keyword search.

Is this test perfect?

No. If you think of any way it isn’t perfect, please make sure you tweet this publicly to let everyone know how smart you are, though.

PL;DR: SEO test

This blog post is its own SEO test.

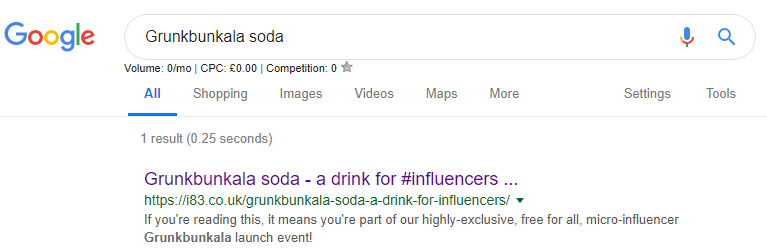

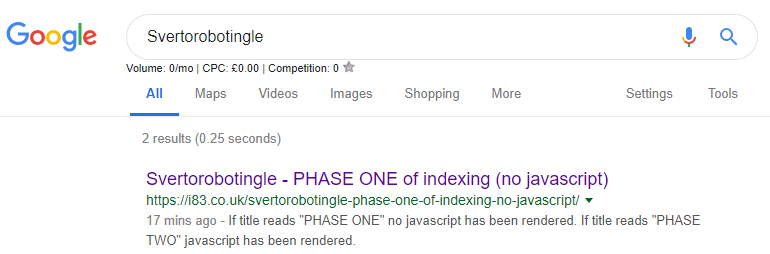

When publishing some new pages this weekend, I noticed something mildly interesting – Google would return results for my newly published URLs both when doing a keyword search and when searching for the URL itself.

Google returning new URL result with URL

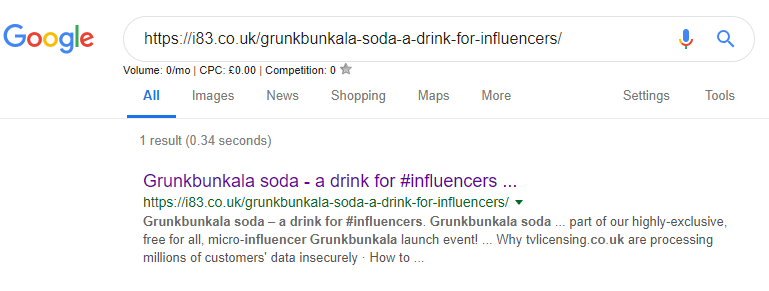

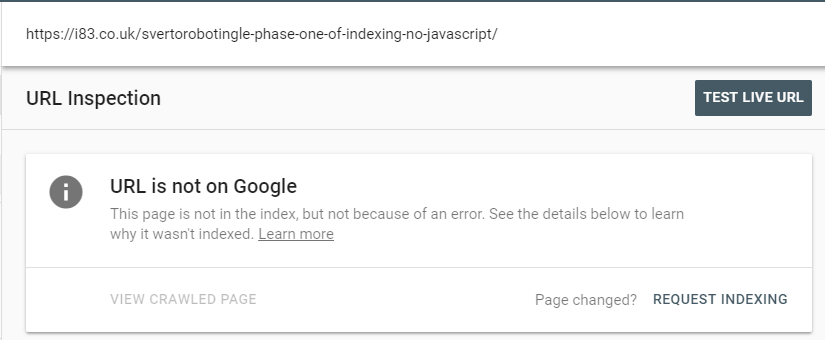

This is not unexpected, but what I did find interesting is that Google Search Console said the URL was not indexed:

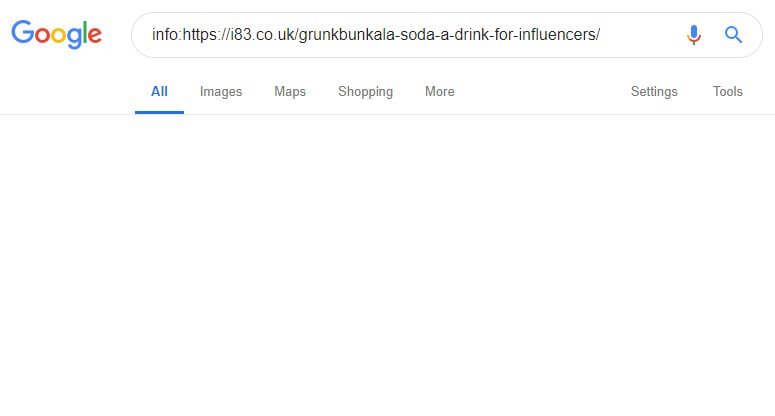

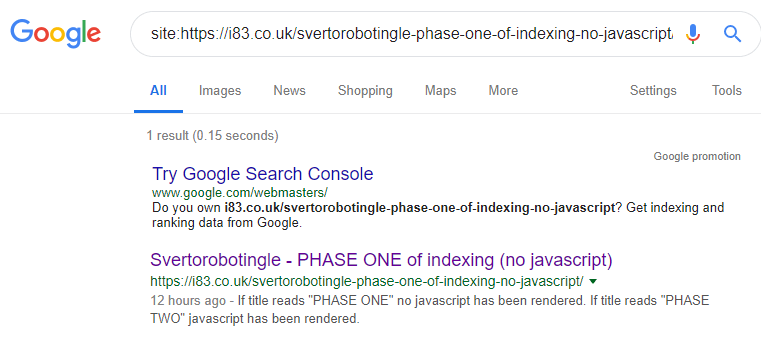

I also noticed that operators such as info: and site: would not return any results for this URL:

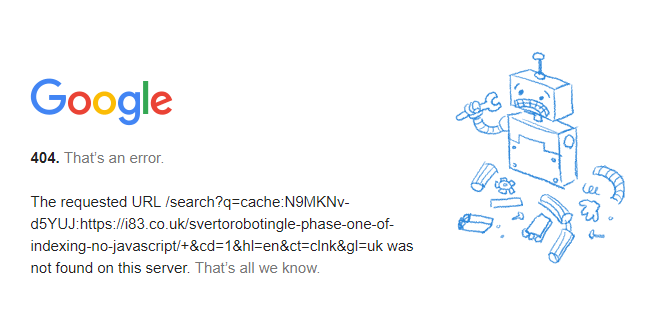

When I requested the cached version of the page, Google also returned a 404, saying the page had not been cached.

Asking Google what’s up

I concluded there was a possibility that Google was using initial crawl data from Googlebot to power search results, while Google Search Console and perhaps commands such as site: info: and cache: only kicked in once the site had been fully rendered by Google.

There are a lot of assumptions there, so I asked Google for their view. Barry came back with confirmation of what I was thinking: two stages of indexing. I could point out that I was thinking this before Barry chipped in, but there isn’t much point, because I only had the clarity to think it because I had read his post about JavaScript and indexing, which I recommend reading.

John Mueller from Google kindly replied, which I think I can paraphrase as “The web is huge, Google is complex, it’s not like we just run a script to index the web, so these things slot into place as and when they can”.

Sounds like a perfectly reasonable and probably correct explanation to me. However, Barry said later on Slack this would be a “nice test”, which was apparently enough to make me do it.

Sounds like the SERP commands and GSC data might depend on 2nd stage indexing whereas the Googlebot visit is only the 1st stage of indexing.

— Barry Adams 🧩 (@badams) January 28, 2019

Lots of things run in parallel, they’re just not all prioritized the same. (And sometimes there are quirks that shift things around a little bit in timing.)

— 🍌 John 🍌 (@JohnMu) January 28, 2019

Results

28/01/2019 @ 19:54:

Page published and submitted for indexing in Google Search Console

28/01/2019 @ 20:01

Super interesting! The page immediately ranked for the keyword and the URL, and was returned by the site: / info: commands. Google Search Console still reports the URL is not indexed and Google also offers no cache. The non-JavaScript title is appearing in the SERPs.

For keyword “Svertorobotingle”: YES

For URL: YES

GSC reporting indexed: NO

Site: working: YES

Info: working: YES

Cache: working: NO

JavaScript title indexed: NO

29/01/2019 @ 11:11:

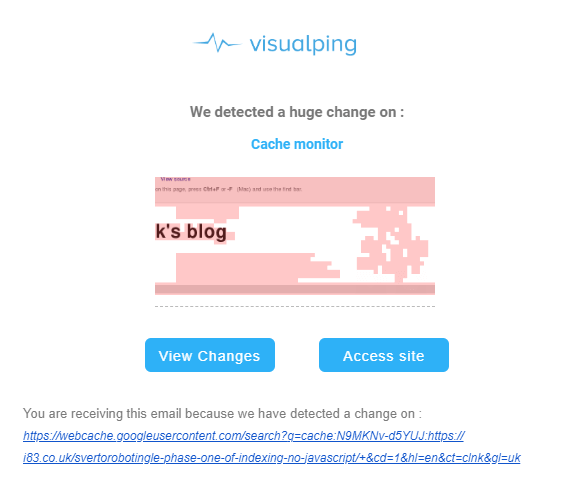

Had an alert from VisualPing that the cache: was not working; manually trying the cache: link still gave a 404.

For keyword “Svertorobotingle”: YES

For URL: YES

GSC reporting indexed: NO

Site: working: YES

Info: working: YES

Cache: working: YES/NO(!)

JavaScript title indexed: NO

29/01/2019 @ 18:10:

Cache seems to have propagated and is working on every request. GSC still reports the page is not indexed.

For keyword “Svertorobotingle”: YES

For URL: YES

GSC reporting indexed: NO

Site: working: YES

Info: working: YES

Cache: working: YES

JavaScript title indexed: NO

01/02/2019 @ 08:10:

GSC reports the page is indexed; the title is still the non-JavaScript title.

For keyword “Svertorobotingle”: YES

For URL: YES

GSC reporting indexed: YES

Site: working: YES

Info: working: YES

Cache: working: YES

JavaScript title indexed: NO

21/02/2019 @ 09:52:

Google has finally cached the JavaScript title!

For keyword “Svertorobotingle”: YES

For URL: YES

GSC reporting indexed: YES

Site: working: YES

Info: working: YES

Cache: working: YES

JavaScript title indexed: YES

It took 24 days, but we got there.

Conclusion

Google functions such as showing a page as indexed in GSC, presenting a cached page, returning results for operators and ranking for the URL/keyword are independent, at least in the sense that none of them appears to depend on another having happened first. I assume this is down to the sheer scale of these systems, as John Mueller hinted at, but it does mean you don’t have to worry if “x hasn’t done y” yet.

It was interesting to see that it took 24 days for the JavaScript version of the page to get rendered, another indicator that, wherever possible, we should not rely on client-side JavaScript to do things on our sites.
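For anyone who wants to check the elapsed times, here is a small sketch that counts calendar days between the publish date and the two later milestones from the results log. The helper name `daysBetween` and the milestone labels are my own; the dates are taken from the log entries above, with times of day ignored.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Date-only ISO strings ("YYYY-MM-DD") are parsed as UTC,
// so daylight saving changes do not affect the result.
function daysBetween(fromIso, toIso) {
  return Math.round((Date.parse(toIso) - Date.parse(fromIso)) / MS_PER_DAY);
}

const published = "2019-01-28";
const milestones = [
  { event: "GSC reporting indexed", date: "2019-02-01" },
  { event: "JavaScript title indexed", date: "2019-02-21" },
];

for (const m of milestones) {
  console.log(`${m.event}: ${daysBetween(published, m.date)} days after publishing`);
}
```

On these dates, GSC reported the page as indexed 4 days after publishing, and the JavaScript title followed 24 days after publishing.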